Optimizing Model Performance: A Guide to Hyperparameter Tuning in Python with Keras

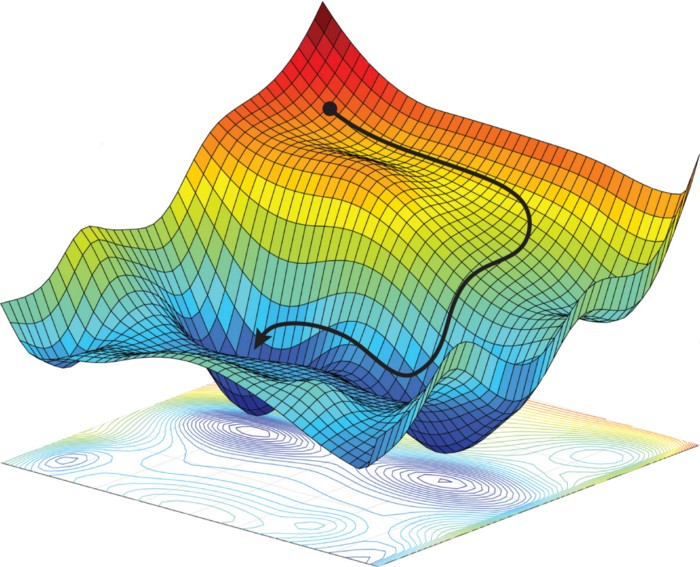

Hyperparameter tuning is the process of selecting the set of hyperparameters that gives a machine learning model the best performance. Unlike model parameters such as the weights of a neural network, which are learned from the data during training, hyperparameters are set by the user before training begins. Examples of hyperparameters include the learning rate, batch size, number of hidden layers, and number of neurons in each hidden layer.
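To make the parameter/hyperparameter distinction concrete, here is a minimal NumPy sketch that is not part of the Keras workflow (`train_linear_model` is a hypothetical helper written only for illustration). It fits a straight line by gradient descent: the slope `w` and intercept `b` are parameters learned from the data, while `learning_rate` and `epochs` are hyperparameters fixed before training starts.

```python
import numpy as np


def train_linear_model(x, y, learning_rate, epochs):
    """Fit y ~ w * x + b by gradient descent on the mean squared error.

    learning_rate and epochs are hyperparameters: chosen before training.
    w and b are parameters: learned from the data during training.
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        error = w * x + b - y
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2.0 * np.mean(error * x)
        grad_b = 2.0 * np.mean(error)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Noiseless data generated with w = 3 and b = 1
x = np.linspace(0.0, 1.0, 50)
y = 3.0 * x + 1.0

# Same data, same algorithm, two different learning-rate hyperparameters
w, b = train_linear_model(x, y, learning_rate=0.5, epochs=500)
w_bad, b_bad = train_linear_model(x, y, learning_rate=1.0, epochs=500)
print(w, b)
print(w_bad, b_bad)
```

With a learning rate of 0.5 the fit recovers w ≈ 3 and b ≈ 1; raising it to 1.0 makes the same procedure diverge, which is exactly the kind of sensitivity hyperparameter tuning is meant to manage.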

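Optimizing hyperparameters is important because the same model can perform very differently depending on these settings. However, it can be a time-consuming and computationally expensive process, because every candidate configuration has to be trained and evaluated, often several times when cross-validation is used.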
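A quick back-of-the-envelope count shows where the cost comes from. The sketch below assumes, for illustration, four hyperparameters with three candidate values each and 3-fold cross-validation; `count_fits` is a hypothetical helper, not a library function.

```python
from math import prod


def count_fits(values_per_hyperparameter, cv_folds, n_iter=None):
    """Number of model trainings a hyperparameter search needs.

    Grid search (n_iter=None) tries every combination; random search tries
    at most n_iter sampled combinations. Each candidate is trained once per fold.
    """
    n_combinations = prod(values_per_hyperparameter)
    n_candidates = n_combinations if n_iter is None else min(n_iter, n_combinations)
    return n_candidates * cv_folds


# Four hyperparameters with three candidate values each, 3-fold cross-validation
print(count_fits([3, 3, 3, 3], cv_folds=3))             # grid search   → 243
print(count_fits([3, 3, 3, 3], cv_folds=3, n_iter=10))  # random search → 30
```

Random search caps the number of candidates no matter how large the grid grows. scikit-learn's search classes also refit the best candidate once more on the full training data by default (`refit=True`), so the real totals are one higher.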

In this tutorial, we will use Python to tune the hyperparameters of a simple Keras neural network on the MNIST handwritten-digit dataset, using scikit-learn's RandomizedSearchCV to run the search.

Hyperparameter Tuning in Python with Keras

Import Libraries

We will start by importing the necessary libraries, including Keras for building the model and scikit-learn for hyperparameter tuning.

```python
import numpy as np
from keras.datasets import mnist
from keras.models import Sequential
from keras.layers import Dense, Dropout
from keras.utils import to_categorical
from keras.optimizers import Adam
from sklearn.model_selection import RandomizedSearchCV
```

Load Data

Next, we will load the MNIST dataset for training and testing the model.

```python
# Load data
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize data: scale pixel values from [0, 255] to [0, 1]
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.

# Flatten data: each 28x28 image becomes a 784-element vector
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))

# One-hot encode labels
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)
```

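In this example, we load the MNIST dataset, scale the pixel values to the range [0, 1], and flatten each 28×28 image into a 784-element vector. We also one-hot encode the labels, turning each digit into a 10-element vector containing a single 1.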
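The three preprocessing steps can be checked on a tiny synthetic array without downloading MNIST. In this sketch the random "images" and labels are made-up stand-ins, and `np.eye(10)[labels]` is used as a NumPy equivalent of `to_categorical` so the example needs nothing but NumPy.

```python
import numpy as np

# Synthetic stand-in for MNIST: 5 random 28x28 uint8 "images" and integer labels
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
labels = np.array([3, 0, 9, 3, 7])

# Normalize: scale pixel values from [0, 255] to [0, 1]
x = images.astype('float32') / 255.

# Flatten: each 28x28 image becomes a 784-element vector
x = x.reshape((len(x), np.prod(x.shape[1:])))

# One-hot encode: row i has a single 1 in column labels[i]
# (same values as keras.utils.to_categorical(labels, num_classes=10))
y = np.eye(10, dtype='float32')[labels]

print(x.shape, y.shape)
print(y[0])
```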

Build Model

Next, we will build the model.

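```python
# Define model: every argument is a hyperparameter we want to tune
def build_model(learning_rate=0.01, dropout_rate=0.0, neurons=64):
    model = Sequential()
    # First hidden layer; the input is a flattened 28x28 image (784 values)
    model.add(Dense(neurons, activation='relu', input_shape=(784,)))
    model.add(Dropout(dropout_rate))
    # Second hidden layer
    model.add(Dense(neurons, activation='relu'))
    model.add(Dropout(dropout_rate))
    # Output layer: one probability per digit class
    model.add(Dense(10, activation='softmax'))

    # Newer Keras versions expect `learning_rate`; the old `lr` alias is no longer accepted
    optimizer = Adam(learning_rate=learning_rate)
    model.compile(loss='categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])
    return model
```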

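In this example, build_model defines a fully connected network with two hidden ReLU layers of `neurons` units each, each followed by a Dropout layer for regularization, and a 10-unit softmax output layer. The model is compiled with the Adam optimizer and categorical cross-entropy loss, and the function's arguments are the hyperparameters we will tune.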
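Since `neurons` is one of the tuned hyperparameters, it helps to see how strongly it drives model size. This sketch counts trainable parameters by hand for the architecture in `build_model` (784 inputs, two hidden layers, 10 outputs); `dense_params` and `count_params` are hypothetical helpers written for illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected (Dense) layer."""
    return n_inputs * n_units + n_units


def count_params(neurons, n_inputs=784, n_classes=10):
    """Trainable parameters of the network built by build_model.

    Dropout layers have no weights, so only the three Dense layers count.
    """
    return (dense_params(n_inputs, neurons)      # first hidden layer
            + dense_params(neurons, neurons)     # second hidden layer
            + dense_params(neurons, n_classes))  # softmax output layer


for neurons in [32, 64, 128]:
    print(neurons, count_params(neurons))
```

This gives 26,506, 55,050 and 118,282 parameters for 32, 64 and 128 neurons, so the largest candidate is more than four times the size of the smallest. The figures should match `build_model(neurons=n).count_params()`, since Dropout adds no weights.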

Perform Hyperparameter Tuning

Next, we will perform hyperparameter tuning using scikit-learn’s RandomizedSearchCV class.

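```python
# RandomizedSearchCV needs a scikit-learn-compatible estimator, not a compiled
# Keras model. The legacy keras.wrappers.scikit_learn module is gone from recent
# Keras releases; SciKeras (pip install scikeras) provides the maintained wrapper.
from scikeras.wrappers import KerasClassifier

# Define hyperparameters. Arguments of build_model are routed with the
# "model__" prefix; batch_size is a parameter of the wrapper itself.
params = {
    'model__learning_rate': [0.01, 0.001, 0.0001],
    'model__dropout_rate': [0.0, 0.1, 0.2],
    'model__neurons': [32, 64, 128],
    'batch_size': [32, 64, 128],
}

# Wrap the build function (not a built model) so scikit-learn can clone and refit it
model = KerasClassifier(model=build_model, loss='categorical_crossentropy',
                        epochs=5, verbose=0)

# Perform hyperparameter tuning: sample 10 of the 81 combinations and
# score each one with 3-fold cross-validation
random_search = RandomizedSearchCV(model, param_distributions=params,
                                   n_iter=10, cv=3, random_state=42)
random_search.fit(x_train, y_train)

# Print best hyperparameters
print(random_search.best_params_)
```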

In this example, we define a dictionary of hyperparameters and the candidate values to try for each. RandomizedSearchCV then samples a fixed number of combinations from it (10 by default, controlled by n_iter) instead of trying all 81, and scores each sampled combination with 3-fold cross-validation. Finally, we print the best hyperparameters found during the search.
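To see what "randomized" means here, scikit-learn exposes the samplers behind its search classes. This sketch uses `ParameterGrid` and `ParameterSampler` from `sklearn.model_selection` on the same four hyperparameters and candidate values to show the full grid size and one random draw of 10 candidates; it does not train anything.

```python
from sklearn.model_selection import ParameterGrid, ParameterSampler

# Same four hyperparameters and candidate values as the search above
params = {
    'learning_rate': [0.01, 0.001, 0.0001],
    'dropout_rate': [0.0, 0.1, 0.2],
    'neurons': [32, 64, 128],
    'batch_size': [32, 64, 128],
}

# Grid search would try every combination
print(len(ParameterGrid(params)))  # → 81

# Random search draws n_iter combinations; because every entry is a list,
# they are sampled without replacement from the grid
candidates = list(ParameterSampler(params, n_iter=10, random_state=42))
for candidate in candidates:
    print(candidate)
```

Because the candidates are distinct draws from the grid, no configuration is trained twice. Passing a `scipy.stats` distribution instead of a list (for example for the learning rate) lets random search explore a continuous range.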

Evaluate Model

Once we have found the best hyperparameters, we can build the final model with those hyperparameters and evaluate its performance on the testing data.

```python
# Collect the best hyperparameters, dropping SciKeras' "model__" routing prefix if present
best = {name.replace('model__', ''): value
        for name, value in random_search.best_params_.items()}

# Build final model with best hyperparameters
model = build_model(learning_rate=best['learning_rate'],
                    dropout_rate=best['dropout_rate'],
                    neurons=best['neurons'])

# Train model with the tuned batch size; hold out 10% of the training data for
# validation so the test set is used only for the final evaluation
model.fit(x_train, y_train, batch_size=best['batch_size'], epochs=10,
          validation_split=0.1)

# Evaluate model on testing data; evaluate() returns [loss, accuracy]
score = model.evaluate(x_test, y_test, verbose=0)
print('Test loss:', score[0])
print('Test accuracy:', score[1])
```

In this example, we build the final model with the best hyperparameters found during hyperparameter tuning. We then train the model and evaluate its performance on the testing data.
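By default (`refit=True`), RandomizedSearchCV already retrains the best configuration on the whole training set and exposes it as `best_estimator_`, so rebuilding the model by hand is optional. The sketch below illustrates this on scikit-learn's small bundled digits dataset, with `LogisticRegression` substituted for the Keras model purely so it runs in seconds; the candidate values of `C` are arbitrary choices for illustration.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Small bundled dataset of 8x8 digit images, pixel values scaled to [0, 1]
X, y = load_digits(return_X_y=True)
X = X / 16.0
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)

# Search over the regularization strength C with 3-fold cross-validation
search = RandomizedSearchCV(
    LogisticRegression(max_iter=1000),
    param_distributions={'C': [0.01, 0.1, 1.0, 10.0]},
    n_iter=3, cv=3, random_state=0,
)
search.fit(X_tr, y_tr)

# best_estimator_ has already been refit on all of X_tr with best_params_
print(search.best_params_)
test_accuracy = search.best_estimator_.score(X_te, y_te)
print(test_accuracy)
```

As in the Keras version, the held-out test set is touched only once, after the search has finished.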

In this tutorial, we covered the basics of hyperparameter tuning and how to perform it using Python with Keras and scikit-learn. By tuning the hyperparameters, we can significantly improve the performance of a machine learning model. I hope you found this tutorial useful in understanding how to optimize model performance through hyperparameter tuning.

Lyron Foster is a Hawai’i based African American Author, Musician, Actor, Blogger, Philanthropist and Multinational Serial Tech Entrepreneur.