AutoML: Automated Machine Learning in Python

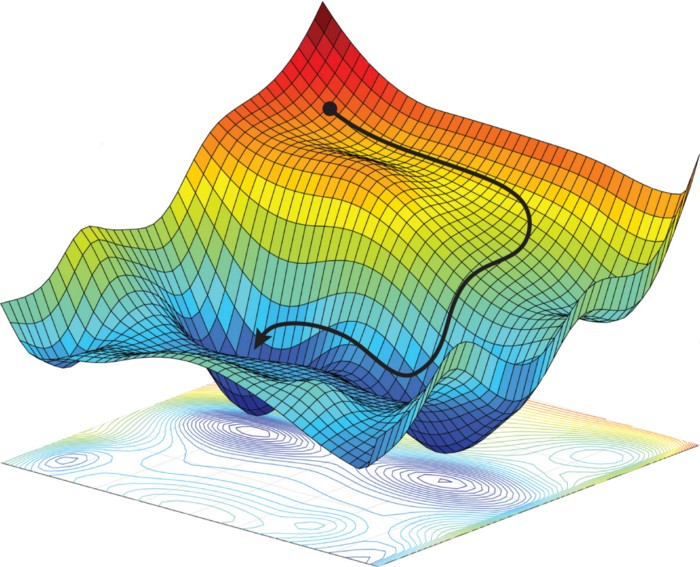

AutoML (Automated Machine Learning) is a branch of machine learning that automates much of the model-building workflow: data preparation, feature engineering, algorithm selection, hyperparameter tuning, and model evaluation. This lets non-experts build and deploy machine learning models with far less effort and technical knowledge.
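To make the idea concrete, here is a minimal sketch of the core loop an AutoML tool runs: try several candidate models, score each on held-out data, and rank them. It is a toy illustration built on assumptions (a synthetic two-class dataset and a hand-written k-nearest-neighbours classifier), not how H2O works internally. All names here, such as `toy_automl`, are hypothetical.

```python
import random


def make_data(n, rng):
    """Generate a toy two-class dataset: one noisy 2-D cluster per class."""
    rows = []
    for _ in range(n):
        label = rng.choice([0, 1])
        center = 0.0 if label == 0 else 3.0
        point = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        rows.append((point, label))
    return rows


def knn_predict(train, point, k):
    """Classify a point by majority vote among its k nearest training rows."""
    def squared_distance(row):
        (x, y), _ = row
        return (x - point[0]) ** 2 + (y - point[1]) ** 2

    nearest = sorted(train, key=squared_distance)[:k]
    votes_for_one = sum(label for _, label in nearest)
    return 1 if votes_for_one * 2 > k else 0


def accuracy(train, valid, k):
    """Fraction of validation rows that k-NN labels correctly."""
    correct = sum(1 for point, label in valid if knn_predict(train, point, k) == label)
    return correct / len(valid)


def toy_automl(train, valid, candidate_ks):
    """Score every candidate and return a leaderboard, best first."""
    leaderboard = [(k, accuracy(train, valid, k)) for k in candidate_ks]
    leaderboard.sort(key=lambda entry: entry[1], reverse=True)
    return leaderboard


rng = random.Random(1)  # fixed seed so the run is repeatable
data = make_data(200, rng)
train, valid = data[:150], data[150:]

# Odd values of k avoid tied votes between the two classes.
leaderboard = toy_automl(train, valid, candidate_ks=[1, 3, 5, 7])
for k, acc in leaderboard:
    print(f"k={k}: validation accuracy {acc:.3f}")
```

A real AutoML library searches across many algorithm families and hyperparameters, but the pattern of training, scoring, and ranking is the same.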

Automated Machine Learning in Python

Python is a popular language for machine learning, and several libraries support AutoML. In this tutorial, we will use the H2O library to perform AutoML in Python.

Install Library

We will start by installing the H2O library. H2O runs on the Java Virtual Machine, so a Java runtime must also be installed on your machine.

pip install h2o

Import Libraries

Next, we will import the necessary libraries, including H2O for AutoML, and NumPy and Pandas for data processing.

import numpy as np

import pandas as pd

import h2o

from h2o.automl import H2OAutoML

Load Data

Next, we will load the data used to train the AutoML model.

# Load data

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"

data = pd.read_csv(url, header=None, names=['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'class'])

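# Start a local H2O cluster and convert the data to H2O format

h2o.init()

h2o_data = h2o.H2OFrame(data)

# Treat the target column as categorical so AutoML runs classification

h2o_data['class'] = h2o_data['class'].asfactor()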

In this example, we load the Iris dataset from the UCI repository into a pandas DataFrame, start a local H2O cluster with h2o.init(), and convert the DataFrame to an H2OFrame.
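Before handing data to AutoML, it can help to confirm that every row has the expected number of columns and to look at the class distribution. The sketch below shows one way to do that. It assumes a small inline sample in the same headerless, comma-separated layout as iris.data instead of downloading the file, and it uses only the standard library so it runs without pandas or H2O. The helper name `load_rows` is hypothetical.

```python
import csv
import io
from collections import Counter

COLUMNS = ['sepal_length', 'sepal_width', 'petal_length', 'petal_width', 'class']

# A few rows in the same layout as iris.data (no header, class label last).
SAMPLE = """5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
7.0,3.2,4.7,1.4,Iris-versicolor
6.3,3.3,6.0,2.5,Iris-virginica

"""


def load_rows(text):
    """Parse headerless CSV text into dicts, validating the column count."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:
            # Skip blank lines, as pandas.read_csv does by default.
            continue
        if len(record) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} fields, got {len(record)}")
        row = dict(zip(COLUMNS, record))
        for name in COLUMNS[:-1]:
            row[name] = float(row[name])  # the four measurements are numeric
        rows.append(row)
    return rows


rows = load_rows(SAMPLE)
class_counts = Counter(row['class'] for row in rows)

print(len(rows), "rows")
for label, count in sorted(class_counts.items()):
    print(label, count)
```

With the pandas DataFrame from the tutorial, `data.shape` and `data['class'].value_counts()` give the same information.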

Train AutoML Model

Next, we will train an AutoML model on the data.

# Train AutoML model

aml = H2OAutoML(max_models=10, seed=1)

aml.train(x=['sepal_length', 'sepal_width', 'petal_length', 'petal_width'], y='class', training_frame=h2o_data)

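In this example, max_models=10 caps the run at 10 models (stacked ensembles are not counted toward this limit), and seed=1 makes the run more reproducible. The x argument lists the feature columns, and y names the target column.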
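The call above trains on the full dataset. If you want an independent estimate of model quality, one common option is to hold out part of the data before training. The sketch below illustrates a stratified split, which keeps the class proportions the same in both parts. It is a standard-library illustration that works on a list of labels, not on an H2OFrame, and the function name `stratified_split` is hypothetical. H2O frames also offer a `split_frame` method for random splits.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=1):
    """Return (train_indices, test_indices) with similar class proportions."""
    rng = random.Random(seed)

    # Group row indices by class label.
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)  # randomise which rows of this class are held out
        cut = int(round(len(indices) * train_fraction))
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# The Iris dataset has 50 rows for each of its three classes.
labels = ['Iris-setosa'] * 50 + ['Iris-versicolor'] * 50 + ['Iris-virginica'] * 50
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), "training rows,", len(test_idx), "test rows")
```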

View Model Leaderboard

Next, we can view the leaderboard of the trained models.

# View model leaderboard

lb = aml.leaderboard

print(lb)

The leaderboard ranks the trained models from best to worst. Because no separate leaderboard frame was supplied, the ranking is based on cross-validation metrics.
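For a multiclass problem such as Iris, H2O AutoML sorts the leaderboard by mean per-class error unless you choose another metric. As a rough illustration of what that number means, the sketch below computes it by hand: the error rate is calculated separately for each class and then averaged. The labels and predictions are made-up values for demonstration, not output from the models above.

```python
def mean_per_class_error(actual, predicted):
    """Average, over classes, of the fraction of that class's rows misclassified."""
    classes = sorted(set(actual))
    per_class_errors = []
    for cls in classes:
        total = sum(1 for a in actual if a == cls)
        wrong = sum(1 for a, p in zip(actual, predicted) if a == cls and p != cls)
        per_class_errors.append(wrong / total)
    return sum(per_class_errors) / len(per_class_errors)


# Hypothetical labels: 2 setosa, 2 versicolor, 4 virginica.
actual = ['setosa', 'setosa', 'versicolor', 'versicolor',
          'virginica', 'virginica', 'virginica', 'virginica']
# One versicolor row is mistaken for virginica; everything else is correct.
predicted = ['setosa', 'setosa', 'versicolor', 'virginica',
             'virginica', 'virginica', 'virginica', 'virginica']

mpce = mean_per_class_error(actual, predicted)
overall_error = sum(1 for a, p in zip(actual, predicted) if a != p) / len(actual)

print(f"mean per-class error: {mpce:.4f}")   # (0 + 0.5 + 0) / 3
print(f"overall error rate:   {overall_error:.4f}")  # 1 / 8
```

The two numbers differ because mean per-class error weights every class equally, regardless of how many rows it has. Lower is better, so the model with the smallest value appears at the top.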

Test AutoML Model

Finally, we can use the trained AutoML model to make predictions on new data.

# Test AutoML model

test_data = pd.DataFrame(np.array([[5.1, 3.5, 1.4, 0.2], [7.7, 3.0, 6.1, 2.3]]), columns=['sepal_length', 'sepal_width', 'petal_length', 'petal_width'])

h2o_test_data = h2o.H2OFrame(test_data)

preds = aml.predict(h2o_test_data)

print(preds)

In this example, aml.predict uses the best model on the leaderboard (the leader) to predict the class of two new data points.
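For a multiclass model, the prediction frame typically contains a predicted label plus one probability column per class. The sketch below shows how the label relates to those probabilities: it is the class with the highest probability. The probability values are invented for illustration and are not real model output, and `pick_class` is a hypothetical helper.

```python
def pick_class(probabilities):
    """Return the class label with the highest predicted probability."""
    return max(probabilities, key=probabilities.get)


# Hypothetical per-class probabilities for two flowers (not real model output).
prediction_rows = [
    {'Iris-setosa': 0.98, 'Iris-versicolor': 0.01, 'Iris-virginica': 0.01},
    {'Iris-setosa': 0.00, 'Iris-versicolor': 0.05, 'Iris-virginica': 0.95},
]

predicted_labels = [pick_class(row) for row in prediction_rows]
for row, label in zip(prediction_rows, predicted_labels):
    print(label, f"(probability {row[label]:.2f})")
```

Looking at the probabilities as well as the label shows how confident the model is in each prediction.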

In this tutorial, we covered the basics of AutoML and used H2O in Python to load data, train a set of candidate models, compare them on a leaderboard, and make predictions on new data. AutoML enables non-experts to build and deploy machine learning models with minimal effort and technical knowledge. I hope you found this tutorial useful in understanding AutoML in Python.

Lyron Foster is a Hawai’i based African American Author, Musician, Actor, Blogger, Philanthropist and Multinational Serial Tech Entrepreneur.